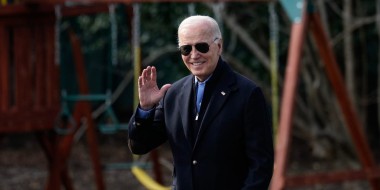

The White House wants to 'cryptographically verify' videos of Joe Biden so viewers don't mistake them for AI deepfakes

Biden's AI advisor Ben Buchanan said a method of clearly verifying White House releases is "in the works."